Explore our credit programs for startups and researchers.

GPU Cloud

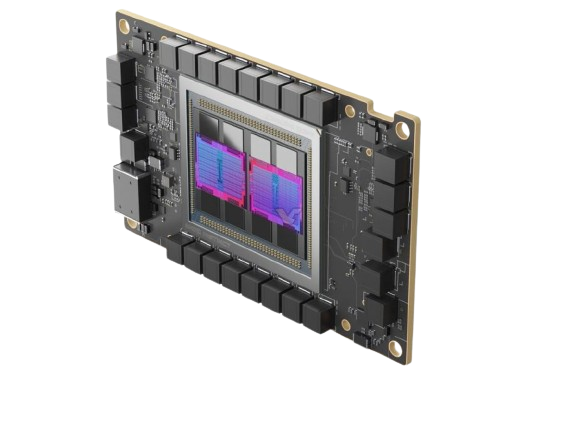

AMD MI250

128 GB

1.6 Tbps Network

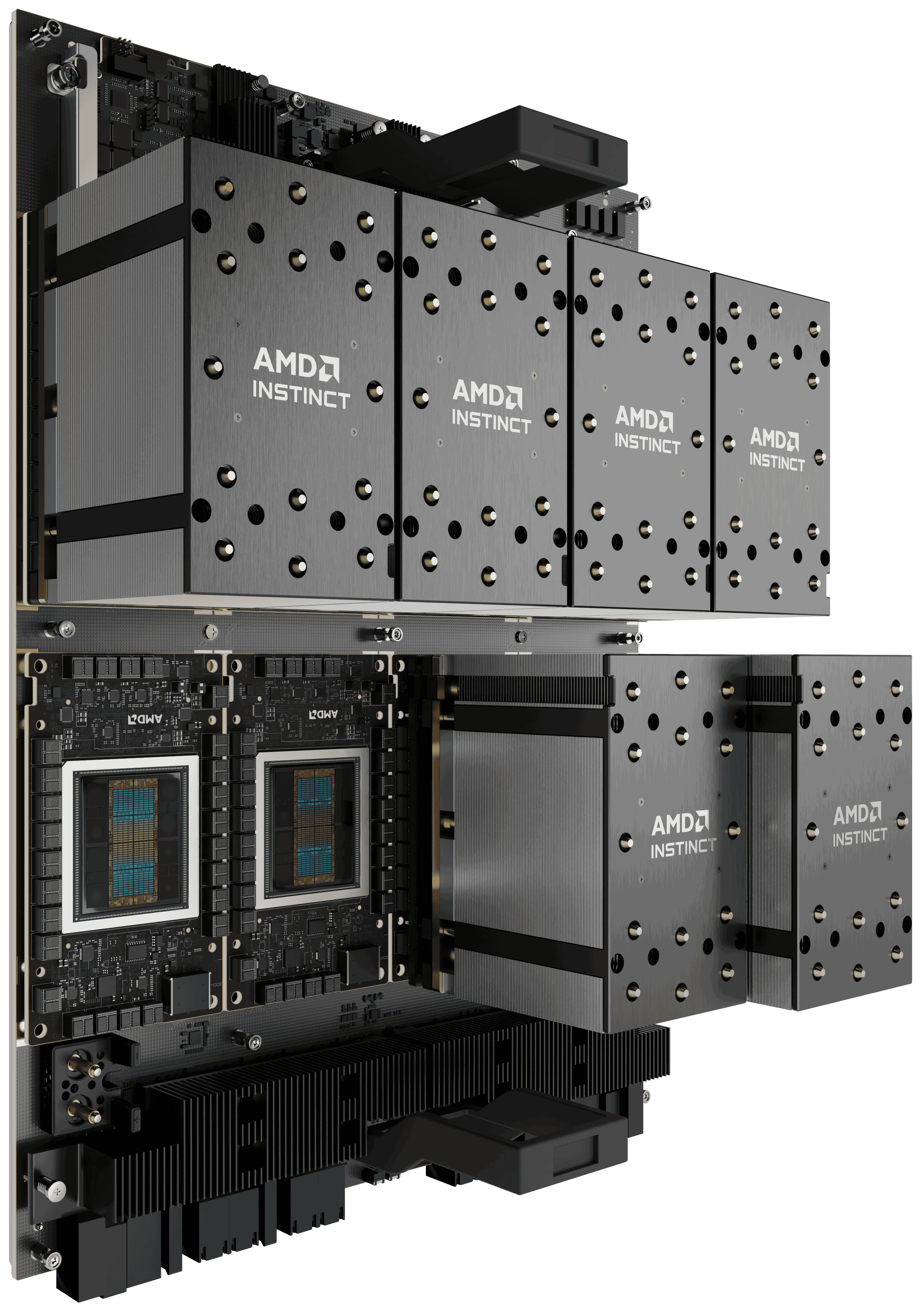

AMD Instinct™ MI300X

On-demand MI300X GPUs are available.

Scale up to hundreds of MI300X GPUs on demand.

1-month to 3-year reservations are also available.

Schedule a time with our team to learn more.

| | MI300X | H100 SXM |
|---|---|---|
| VRAM | 192 GB | 80 GB |
| Memory Bandwidth | 5.3 TB/s | 3.4 TB/s |
| FP64 | 81.7 TFLOPs | 67 TFLOPs |
| FP32 | 163.4 TFLOPs | 67 TFLOPs |
| FP16 (with sparsity) | 2,610 TFLOPs | 1,979 TFLOPs |
| FP8 (with sparsity) | 5,220 TFLOPs | 3,958 TFLOPs |
| GPU-to-GPU Interconnect | PCIe 128 GB/s | NVLink 900 GB/s; PCIe 128 GB/s |
| Network | 3.2 Tb/s | - |

AMD Instinct™ MI250

1-month to 3-year reservations available.

Schedule a time with our team to learn more.

On-Demand options coming soon.

Arriving as early as August 2024.

| | MI250 | A100 SXM |
|---|---|---|
| VRAM | 128 GB | 80 GB |
| Memory Bandwidth | 3.2 TB/s | 2 TB/s |
| FP64 / FP32 | 45.3 TFLOPs | 19.5 TFLOPs |
| FP16 | 362 TFLOPs | 312 TFLOPs |
| GPU-to-GPU Interconnect | PCIe 100 GB/s | NVLink 600 GB/s; PCIe 64 GB/s |
| Network | 1.6 Tb/s | - |

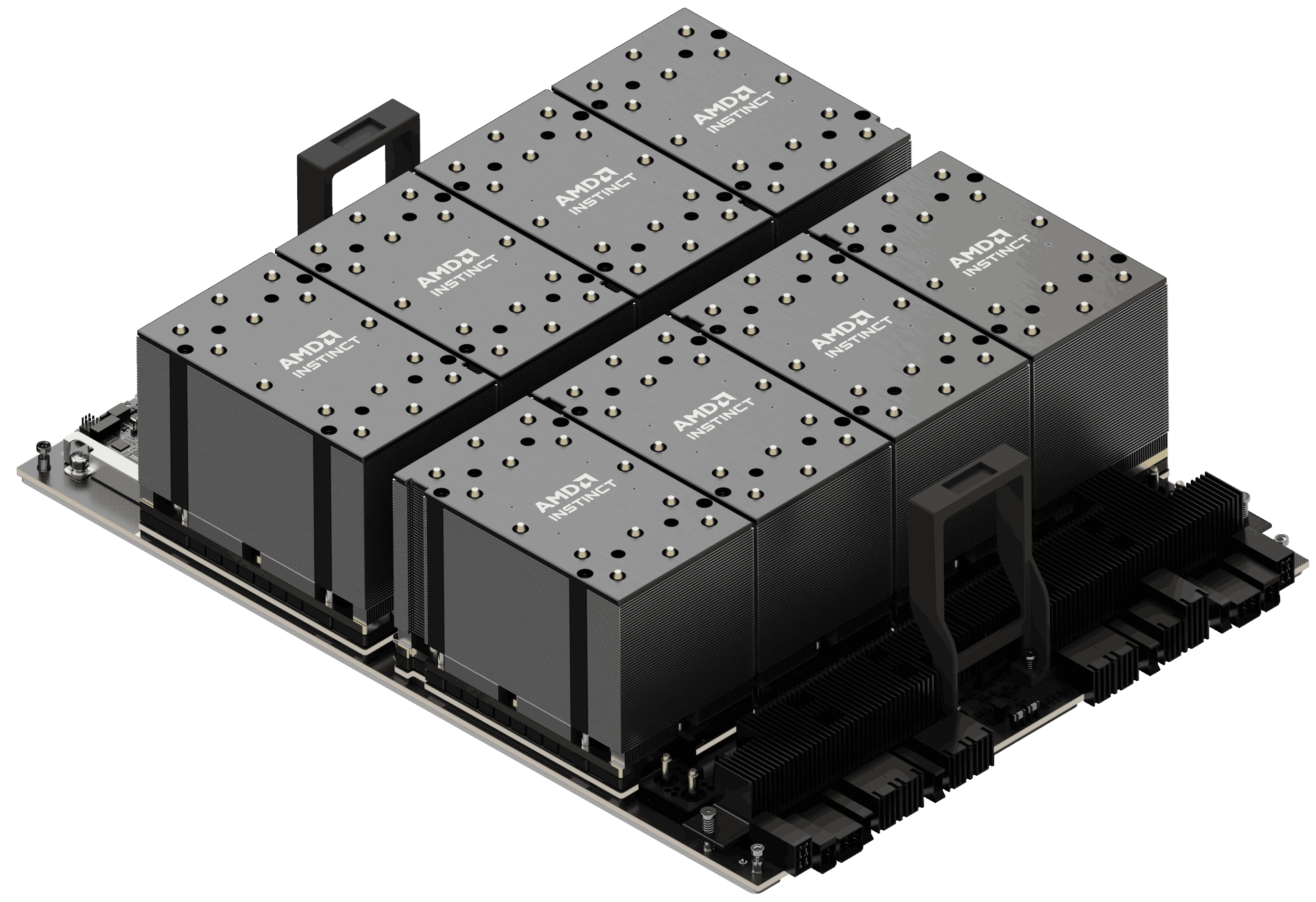

Scale up to 10,000+ GPUs seamlessly

Scale quickly to thousands of GPUs without sacrificing cost or reliability.