Instant Clusters: Available instantly, no contract required.

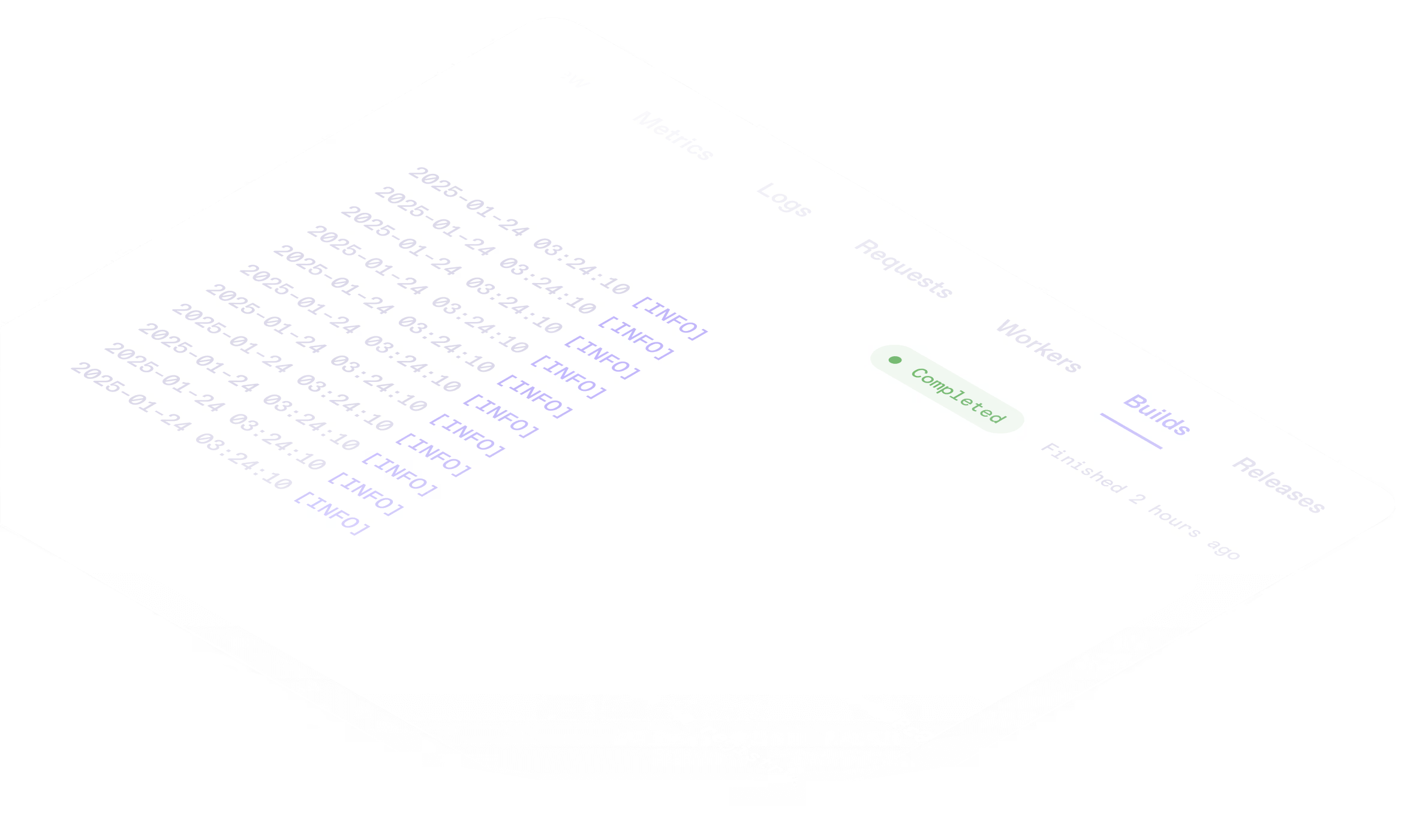

Launch in minutes.

Pay by the second.

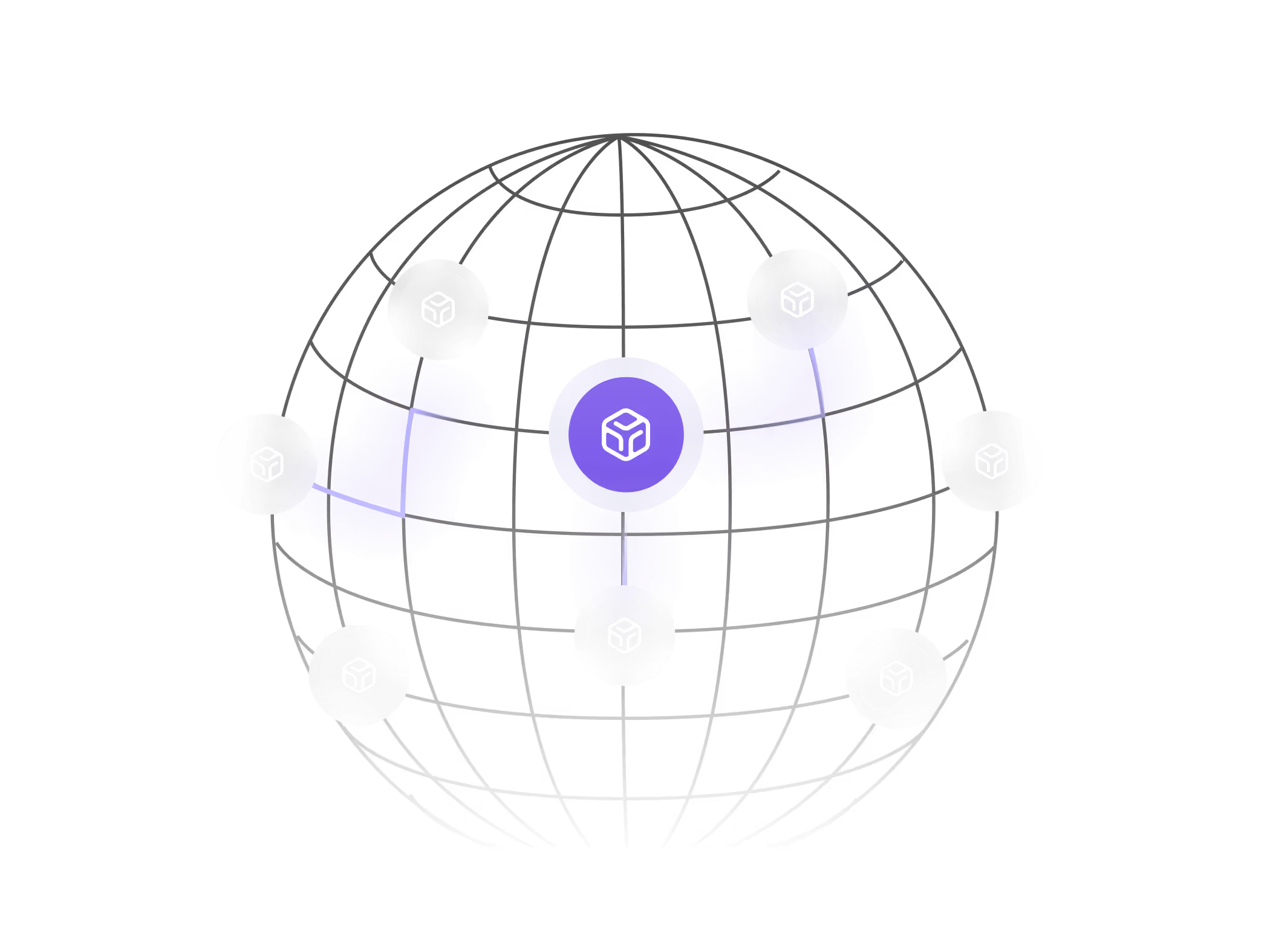

Scale globally.

Reserved Clusters: Dedicated capacity for large-scale workloads.

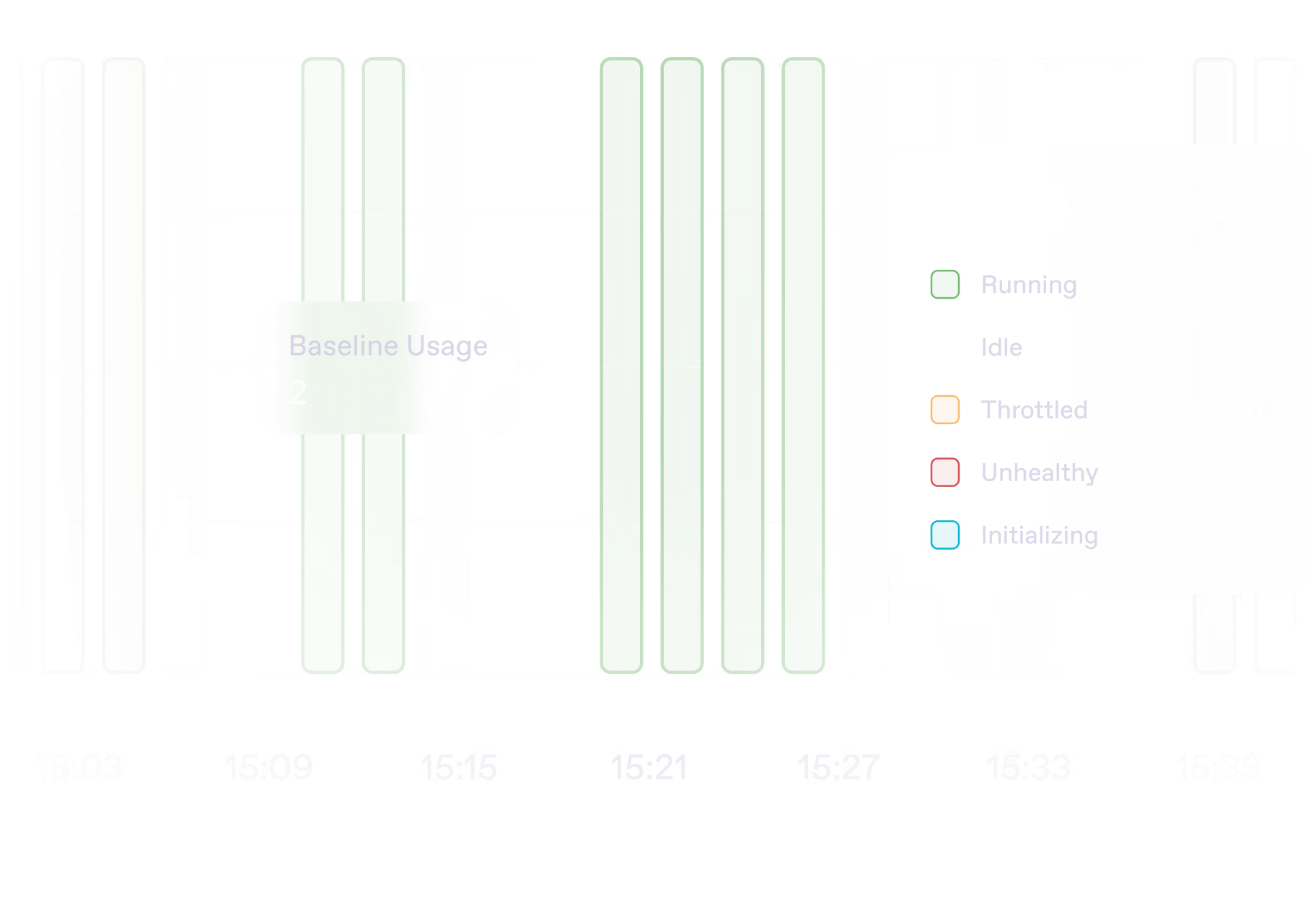

Uptime guarantee

Secure by default

Scale to thousands of GPUs

"The Runpod team has clearly prioritized the developer experience to create an elegant solution that enables individuals to rapidly develop custom AI apps or integrations while also paving the way for organizations to truly deliver on the promise of AI."

"Runpod is the only place I can deploy high-end GPU models instantly—no sales calls, no rate limits, no nonsense."

"The main value proposition for us was the flexibility Runpod offered. We were able to scale up effortlessly to meet the demand at launch."

"Runpod helped us scale the part of our platform that drives creation. That's what fuels the rest—image generation, sharing, remixing. It starts with training."

Trusted by today's leaders, built for tomorrow's pioneers.

Questions? Answers.