Built for open source.

Discover, fork, and contribute to community-driven projects.

One-click deployment.

Skip the setup—launch any package straight from GitHub.

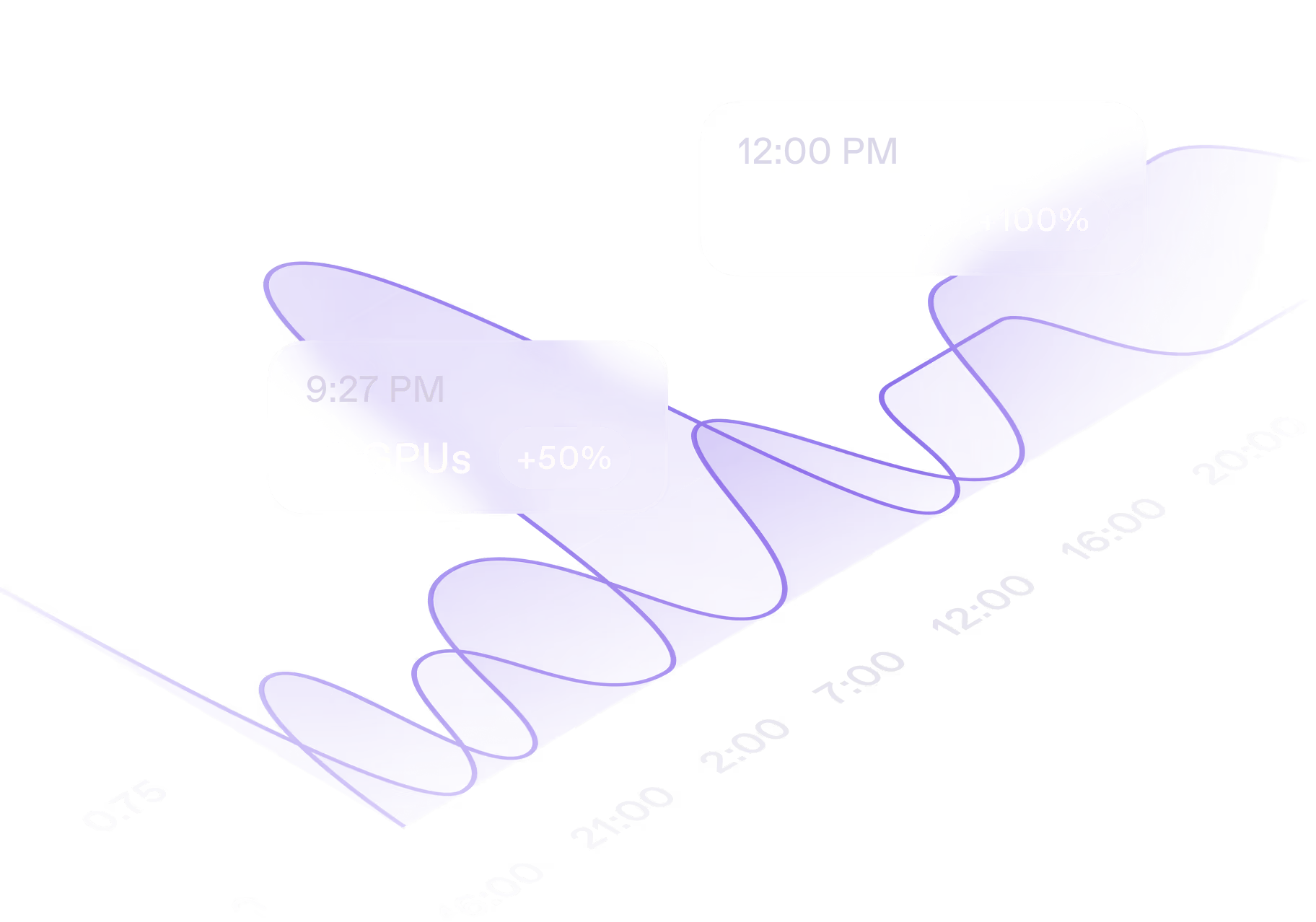

Autoscaling endpoints.

Deploy autoscaling endpoints from community templates.

How it Works

From code to cloud.

Deploy, scale, and manage your entire stack in one streamlined workflow.

Public endpoints

Access ready-to-use public AI endpoints.

Test, integrate, and deploy without provisioning your own infrastructure.

Community

Join the community.

Build, share, and connect with thousands

YuvrajS9886

Introducing SmolLlama! An effort to make a mini-ChatGPT from scratch! Its based on the Llama (123 M) structure I coded and pre-trained on 10B tokens (10k steps) from the FineWeb dataset from scratch using DDP (torchrun) in PyTorch. Used 2xH100 (SXM) 80GB VRAM from Runpod

Yoeven

The @runpod_io event was amazing! One reason we can boast about fast speeds at @jigsawstack is because the cold boot on runpod GPUs is basically nonexistent!

Dean Jones

Runpod has great prices as well

dfranke

Shoutout to @runpod_io as I work through my first non-trivial machine learning experiment. They have exactly what you need if you're a hobbyist and their prices are about a fifth of the big cloud providers.

othocs

@runpod_io is so goated, first time trying it today and it’s super easy to setup + their ai helper on discord was very helpful If you ever need cpus/gpus I recommend it!

jzlegion

ai engineering is just tweaking config values in a notebook until you run out of runpod credits

SuperHumanEpoch

I have been testing work with @runpod_io last 2 weeks and I've to say the service is pretty amazing. Super awesome UX and DevEX (and plenty of GPU backend choices). It's about ~20% pricier than Lambda labs, but worth it IMO given all the harness and workflow they provide that Lambda doesn't. I'm not associated with them in any way or manner, btw. Just a very happy customer.

Dwayne

Just discovered @runpod_io 🤯🤯🤯 Per second billing for serverless GPU capacity?! Infinitely scalable?! Whaaaat

rachel

thank u runpod i was doing a training run for work when GCP and cloudflare died 🙏🙏 i appreciate u staying online it finished successfully

SaaS Wiz

I love runpod

AlicanKiraz0

Runpod > Sagemaker, VertexAi, AzureML

SkotiVi

For anyone annoyed with Amazon's (and Azure's and Google's) gatekeeping on their cloud GPU VMs, I recommend @runpod_io None of the 'prove you really need this much power' bs from the majors Just great pricing, availability, and an intuitive UI

skypilot_org

🏃 RunPod is now available on SkyPilot! ✈️ Get high-end GPUs (3x cheaper) with great availability: sky launch --gpus H100 Great thanks to @runpod_io for contributing this integration to join the Sky!

qtnx_

1.3k spent on the training run, this latest release would not have been possible without runpod

DrRogerThomp

Trained a 7B parameter model in just 90 minutes for $0.80 using LoRA + Runpod.

Yes, it’s possible—and no, you don’t need enterprise hardware.

winglian

Axolotl works out of the box with @runpod_io's Instant Clusters. It's as easy as running this on each node using the Docker images that we ship.

Mascobot

Apparently, we got a Kaggle silver medal in the @arcprize for being in position 17th out of 1430 teams 🙃 I wish I had more time to spend on it; we worked on it for a couple of weeks for fun with limited compute (HUGE thanks to @runpod_io!)

oliviawells

Needed a GPU for a quick job, didn’t want to commit to anything long-term. RunPod was perfect for that. Love that I can just spin one up and shut it down after.

berliangor

i'm a big fan of @runpod_io they're most reliable GPU provider for training and running your models at scale

DataEatsWorld

Thanks @runpod_io, loving all of the updates! 👀

abacaj

Runpod support > lambdalabs support. For on demand GPUs runpod still works the best ime

casper_hansen_

Why is Huggingface not adding RunPod as a serverless provider? RunPod is 10-15x cheaper for serverless deployment than AWS and GCP

Pauline_Cx

I'm proud to be part of the GPU Elite, awarded by @runpod_io 😍

FAQs

Questions? Answers.

Runpod Hub explained.

What is Runpod Hub?

Runpod Hub is a centralized catalog of preconfigured AI repositories that you can browse, deploy, and share. All repos are optimized for Runpod’s Serverless infrastructure, so you can go from discovery to a running endpoint in minutes.

Is Runpod Hub production-ready?

No—the Hub is currently in beta. We’re actively adding features and fixing bugs. Join our Discord if you’d like to give feedback or report issues.

Why should I use Runpod Hub instead of deploying my own containers manually?

One-click deployment: All Hub repos come with prebuilt Docker images and Serverless handlers. You don’t have to write Dockerfiles or manage dependencies.

Configuration UI: We expose common parameters (environment variables, model paths, precision settings, etc.) so you can tweak a repo without touching code.

Built-in testing: Every repo in the Hub has automated build-and-test pipelines. You can trust that the code runs properly on Runpod before you click “Deploy.”

Save time: Instead of cloning a repo, installing dependencies, and debugging runtime issues, you can launch a vetted endpoint in minutes.
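Each Hub repo ships a Serverless handler, and the Configuration UI surfaces settings such as environment variables. As a minimal sketch of what such a handler can look like, assuming the `runpod` Python SDK (its `runpod.serverless.start({"handler": ...})` entry point passes each job as a dict with an `input` key), here is a toy `handler.py`. The `HUB_GREETING` environment variable and the `name` input field are hypothetical, standing in for whatever parameters a real repo exposes.

```python
import os


def handler(job):
    """Serverless handler: receives one job dict and returns a JSON-serializable result."""
    job_input = job.get("input") or {}
    # Hypothetical setting that a Hub listing could expose as an environment variable.
    greeting = os.environ.get("HUB_GREETING", "Hello")
    name = job_input.get("name", "world")
    return {"message": f"{greeting}, {name}!"}


if __name__ == "__main__":
    # Requires the Runpod Python SDK (pip install runpod); imported here so the
    # handler itself can be unit-tested without the SDK installed.
    import runpod

    runpod.serverless.start({"handler": handler})
```

Keeping the handler a plain function means it can be exercised locally with a dict such as `{"input": {"name": "Ada"}}` before it is packaged into a Docker image.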

Who benefits from using the Hub?

End users/Developers: Quickly find and run popular AI models (LLMs, Stable Diffusion, OCR, etc.) without setup headaches. Customize inputs via a simple form instead of editing code.

Hub creators: Showcase your open-source work to the Runpod community. Every new GitHub release triggers an automated build/test cycle in our pipeline, ensuring your repo stays up to date.

Enterprises/Teams: Adopt standardized, production-ready AI endpoints without reinventing infrastructure. Onboard developers faster by pointing them to Hub listings rather than internal deployment docs.

How do I deploy a repo from the Hub?

In the Runpod console, go to the Hub page.

Browse or search for a repo that matches your needs.

Click on the repo to view details—check hardware requirements (CPU vs. GPU, disk size) and any exposed configuration options.

Click Deploy (or choose an older version via the dropdown).

Click Create Endpoint. Within minutes, you’ll have a live Serverless endpoint you can call via API.

For more details, see the docs: https://docs.runpod.io/hub/overview
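Once the endpoint is live, calling it "via API" is an authenticated HTTP POST. The sketch below assumes Runpod's synchronous route `https://api.runpod.ai/v2/<endpoint_id>/runsync`, a bearer API key read from a `RUNPOD_API_KEY` environment variable, and a JSON body wrapped in an `input` object. The endpoint ID and the `prompt` field are placeholders; the input fields a given repo accepts are described on its Hub listing.

```python
import json
import os
import urllib.request

API_BASE = "https://api.runpod.ai/v2"


def build_runsync_request(endpoint_id, api_key, payload):
    """Build (but do not send) a synchronous job request for a Serverless endpoint."""
    url = f"{API_BASE}/{endpoint_id}/runsync"
    body = json.dumps({"input": payload}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def call_endpoint(endpoint_id, payload, timeout=120):
    """Send the request and return the decoded JSON response."""
    api_key = os.environ["RUNPOD_API_KEY"]
    req = build_runsync_request(endpoint_id, api_key, payload)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


if __name__ == "__main__":
    # "your-endpoint-id" and {"prompt": ...} are placeholders for illustration.
    print(call_endpoint("your-endpoint-id", {"prompt": "Hello"}))
```

Separating request construction from sending keeps the URL, headers, and body testable without network access. For long-running jobs, the asynchronous `/run` route, which returns a job ID to poll, is usually a better fit than `/runsync`.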

How do I share my own AI repo in the Hub?

Prepare a working Serverless implementation in your GitHub repo. You’ll need a handler.py (or equivalent), a Dockerfile, and a README.md.

Add a .runpod/hub.json file with metadata (title, description, category, hardware settings, environment variables, presets).

Add a .runpod/tests.json file that defines one or more test cases to exercise your endpoint (each test should return HTTP 200).

Create a GitHub Release (the Hub indexes releases rather than commits).

In the Runpod console, go to the Hub and click Get Started under “Add your repo.” Enter your GitHub URL and follow the prompts.

Once submitted, our build pipeline will automatically scan, build, and test your repo. After it passes, our team will manually review it. If approved, your repo appears live in the Hub.

For more details, see the docs: https://docs.runpod.io/hub/publishing-guide
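Before creating a release, a quick local check can catch missing files. The helper below is hypothetical and not part of Runpod's tooling: it only confirms that the files named in the steps above exist and that the two `.runpod` metadata files parse as JSON. It does not validate the `hub.json` or `tests.json` schema; the Hub's build pipeline and the publishing guide remain the authority on that.

```python
import json
import sys
from pathlib import Path

# Files named in the publishing steps. The handler may use another name
# ("handler.py (or equivalent)"), so it is passed in as a parameter.
REQUIRED_FILES = ["Dockerfile", "README.md", ".runpod/hub.json", ".runpod/tests.json"]
JSON_FILES = [".runpod/hub.json", ".runpod/tests.json"]


def missing_hub_files(repo_root, handler_name="handler.py"):
    """Return the expected files that are absent from repo_root, in order."""
    root = Path(repo_root)
    expected = [handler_name] + REQUIRED_FILES
    return [rel for rel in expected if not (root / rel).is_file()]


def invalid_json_files(repo_root):
    """Return the .runpod metadata files that exist but are not valid JSON."""
    root = Path(repo_root)
    bad = []
    for rel in JSON_FILES:
        path = root / rel
        if not path.is_file():
            continue  # already reported by missing_hub_files
        try:
            json.loads(path.read_text(encoding="utf-8"))
        except json.JSONDecodeError:
            bad.append(rel)
    return bad


if __name__ == "__main__":
    repo = sys.argv[1] if len(sys.argv) > 1 else "."
    problems = [f"missing: {p}" for p in missing_hub_files(repo)]
    problems += [f"invalid JSON: {p}" for p in invalid_json_files(repo)]
    for line in problems:
        print(line)
    sys.exit(1 if problems else 0)
```

Running it from the repo root prints nothing and exits with status 0 when every file is present and parses, which makes it easy to drop into a CI step ahead of tagging a GitHub Release.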

Clients

Trusted by today's leaders, built for tomorrow's pioneers.

Engineered for teams building the future.

![black-forest-labs / FLUX.1 Kontext [dev]](https://cdn.prod.website-files.com/67e1e36d3551f5a66e4095f5/68accefd4aa2af175d1a8349_black-forest-labs-flux-1-kontext-dev.webp)

![black-forest-labs / FLUX.1 [dev]](https://cdn.prod.website-files.com/67e1e36d3551f5a66e4095f5/68acd0588d67b16bbe7f1a90_black-forest-labs-flux-1-dev.webp)