Runpod makes GPU infrastructure simple.

Go from idea to deployment in a single flow.

Runpod simplifies every step of your workflow, so you can build, scale, and optimize without ever managing infrastructure.

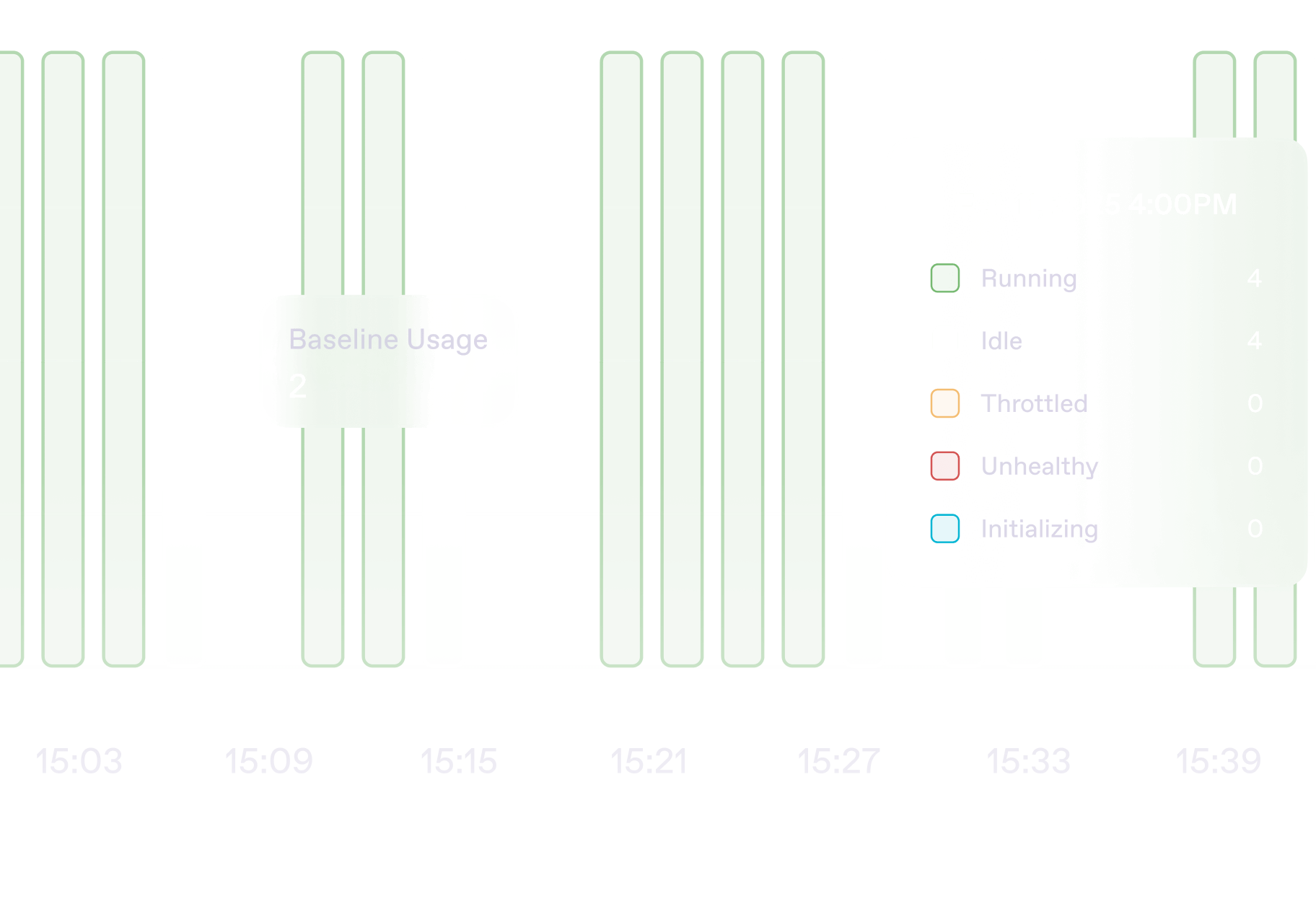

Enterprise-grade uptime.

Managed orchestration.

Real-time logs.

Scale with Serverless when you're ready for production.

Autoscale in seconds

Zero cold starts with active workers

<200 ms cold start with FlashBoot

Persistent network storage

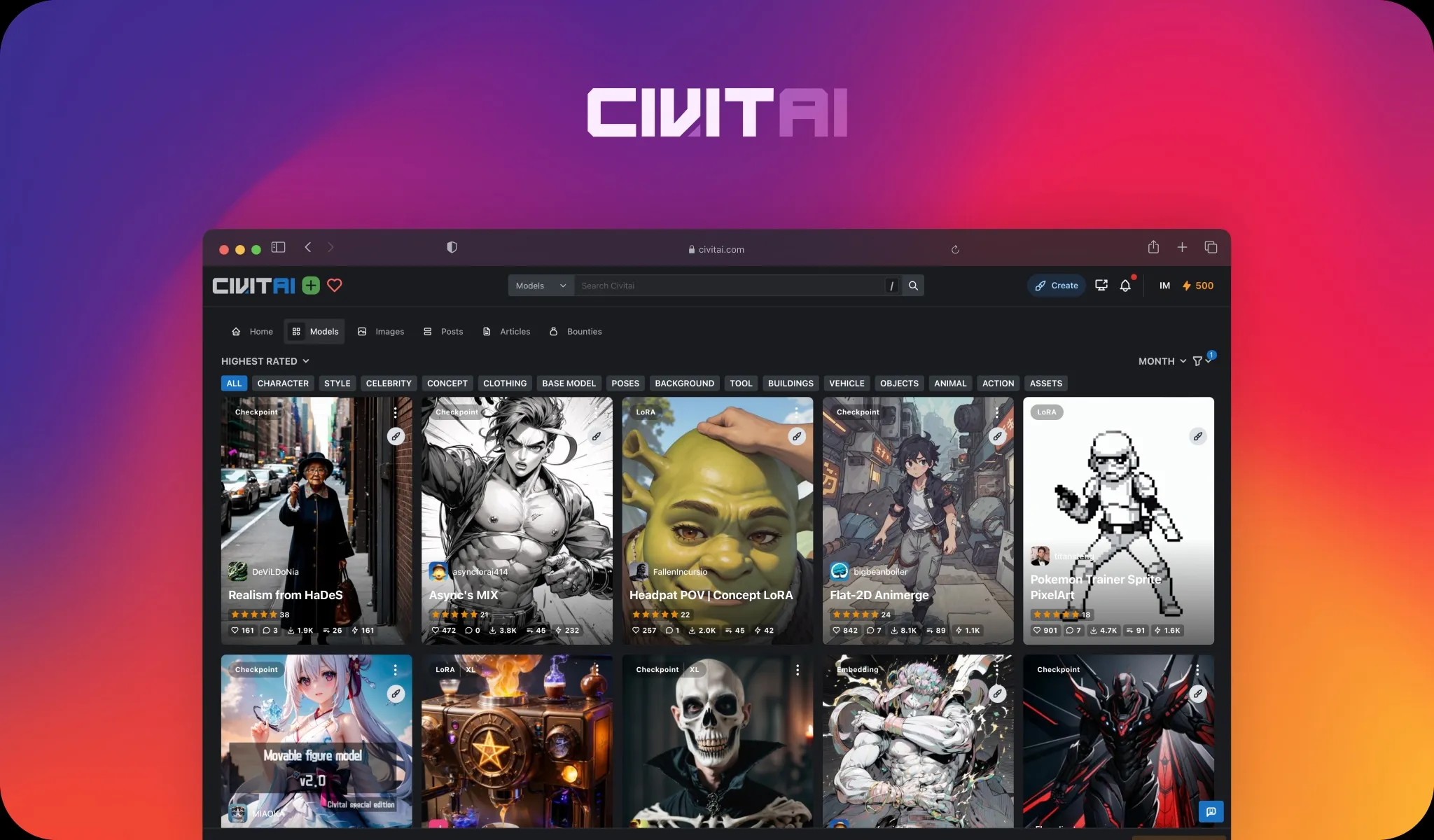

Loved by developers.

Get more done for every dollar.

>500 million

57%

Unlimited

Enterprise-grade from day one.

99.9% uptime

Secure by default

Scale to hundreds of GPUs
